What is Life? The Physical Aspect of the Living Cell

This short book, written by Schrödinger, one of the fathers of quantum mechanics, influenced the discovery of the structure of DNA. In these 1943 lectures he predicted the presence of ‘an aperiodic crystal’ and described how it acts as a ‘code-script’ for how organisms work. Within 10 years, in 1953, the double-helix structure of DNA was worked out, and DNA is both aperiodic and the code-script of life! He also shows how life looks unintuitive in the light of known physical laws, how it feeds on ‘negative entropy’ and how we need a different type of law to explain life. The epilogue, ‘On Determinism and Free Will’, is the cherry on top and gives a physicist’s view on the topic. Written for dilettantes like myself (Schrödinger himself being one), this book is highly readable and relevant.

Based on lectures delivered under the auspices of the Dublin Institute for Advanced Studies at Trinity College, Dublin, in February 1943.

To the memory of My Parents

Preface

A scientist is supposed to have a complete and thorough knowledge, at first hand, of some subjects and, therefore, is usually expected not to write on any topic of which he is not a master. This is regarded as a matter of noblesse oblige. For the present purpose I beg to renounce the noblesse, if any, and to be freed of the ensuing obligation.

My excuse is as follows: We have inherited from our forefathers the keen longing for unified, all-embracing knowledge. The very name given to the highest institutions of learning reminds us, that from antiquity and throughout many centuries the universal aspect has been the only one to be given full credit. But the spread, both in width and depth, of the multifarious branches of knowledge during the last hundred odd years has confronted us with a queer dilemma. We feel clearly that we are only now beginning to acquire reliable material for welding together the sum total of all that is known into a whole; but, on the other hand, it has become next to impossible for a single mind fully to command more than a small specialized portion of it. I can see no other escape from this dilemma (lest our true aim be lost for ever) than that some of us should venture to embark on a synthesis of facts and theories, albeit with second-hand and incomplete knowledge of some of them – and at the risk of making fools of ourselves. So much for my apology.

The difficulties of language are not negligible. One’s native speech is a closely fitting garment, and one never feels quite at ease when it is not immediately available and has to be replaced by another. My thanks are due to Dr Inkster (Trinity College, Dublin), to Dr Padraig Browne (St Patrick’s College, Maynooth) and, last but not least, to Mr S. C. Roberts. They were put to great trouble to fit the new garment on me and to even greater trouble by my occasional reluctance to give up some ‘original’ fashion of my own. Should some of it have survived the mitigating tendency of my friends, it is to be put at my door, not at theirs.

The head-lines of the numerous sections were originally intended to be marginal summaries, and the text of every chapter should be read in continuo.

E.S.

Dublin

September 1944

Homo liber nulla de re minus quam de morte cogitat; et ejus sapientia non mortis sed vitae meditatio est. SPINOZA’S Ethics, Pt IV, Prop. 67

(There is nothing over which a free man ponders less than death; his wisdom is, to meditate not on death but on life.)

Chapter 1: The Classical Physicist’s Approach to the Subject

This little book arose from a course of public lectures, delivered by a theoretical physicist to an audience of about four hundred which did not substantially dwindle, though warned at the outset that the subject-matter was a difficult one and that the lectures could not be termed popular, even though the physicist’s most dreaded weapon, mathematical deduction, would hardly be utilized. The reason for this was not that the subject was simple enough to be explained without mathematics, but rather that it was much too involved to be fully accessible to mathematics. Another feature which at least induced a semblance of popularity was the lecturer’s intention to make clear the fundamental idea, which hovers between biology and physics, to both the physicist and the biologist.

For actually, in spite of the variety of topics involved, the whole enterprise is intended to convey one idea only – one small comment on a large and important question. In order not to lose our way, it may be useful to outline the plan very briefly in advance. The large and important and very much discussed question is: How can the events in space and time which take place within the spatial boundary of a living organism be accounted for by physics and chemistry? The preliminary answer which this little book will endeavor to expound and establish can be summarized as follows: The obvious inability of present-day physics and chemistry to account for such events is no reason at all for doubting that they can be accounted for by those sciences.

Statistical Physics. The Fundamental Difference in Structure

That would be a very trivial remark if it were meant only to stimulate the hope of achieving in the future what has not been achieved in the past. But the meaning is very much more positive, viz. that the inability, up to the present moment, is amply accounted for. Today, thanks to the ingenious work of biologists, mainly of geneticists, during the last thirty or forty years, enough is known about the actual material structure of organisms and about their functioning to state that, and to tell precisely why, present-day physics and chemistry could not possibly account for what happens in space and time within a living organism.

The arrangements of the atoms in the most vital parts of an organism and the interplay of these arrangements differ in a fundamental way from all those arrangements of atoms which physicists and chemists have hitherto made the object of their experimental and theoretical research. Yet the difference which I have just termed fundamental is of such a kind that it might easily appear slight to anyone except a physicist who is thoroughly imbued with the knowledge that the laws of physics and chemistry are statistical throughout. For it is in relation to the statistical point of view that the structure of the vital parts of living organisms differs so entirely from that of any piece of matter that we physicists and chemists have ever handled physically in our laboratories or mentally at our writing desks. It is well-nigh unthinkable that the laws and regularities thus discovered should happen to apply immediately to the behaviour of systems which do not exhibit the structure on which those laws and regularities are based.

The non-physicist cannot be expected even to grasp, let alone to appreciate the relevance of, the difference in ‘statistical structure’ stated in terms so abstract as I have just used. To give the statement life and colour, let me anticipate what will be explained in much more detail later, namely, that the most essential part of a living cell – the chromosome fibre – may suitably be called an aperiodic crystal. In physics we have dealt hitherto only with periodic crystals. To a humble physicist’s mind, these are very interesting and complicated objects; they constitute one of the most fascinating and complex material structures by which inanimate nature puzzles his wits. Yet, compared with the aperiodic crystal, they are rather plain and dull. The difference in structure is of the same kind as that between an ordinary wallpaper in which the same pattern is repeated again and again in regular periodicity and a masterpiece of embroidery, say a Raphael tapestry, which shows no dull repetition, but an elaborate, coherent, meaningful design traced by the great master.

In calling the periodic crystal one of the most complex objects of his research, I had in mind the physicist proper. Organic chemistry, indeed, in investigating more and more complicated molecules, has come very much nearer to that ‘aperiodic crystal’ which, in my opinion, is the material carrier of life. And therefore it is small wonder that the organic chemist has already made large and important contributions to the problem of life, whereas the physicist has made next to none.

The Naive Physicist’s Approach to the Subject

After having thus indicated very briefly the general idea – or rather the ultimate scope – of our investigation, let me describe the line of attack.

I propose to develop first what you might call ‘a naive physicist’s ideas about organisms’, that is, the ideas which might arise in the mind of a physicist who, after having learnt his physics and, more especially, the statistical foundation of his science, begins to think about organisms and about the way they behave and function and who comes to ask himself conscientiously whether he, from what he has learnt, from the point of view of his comparatively simple and clear and humble science, can make any relevant contributions to the question. It will turn out that he can.

The next step must be to compare his theoretical anticipations with the biological facts. It will then turn out that – though on the whole his ideas seem quite sensible – they need to be appreciably amended. In this way we shall gradually approach the correct view – or, to put it more modestly, the one that I propose as the correct one. Even if I should be right in this, I do not know whether my way of approach is really the best and simplest. But, in short, it was mine. The ‘naive physicist’ was myself. And I could not find any better or clearer way towards the goal than my own crooked one.

Why are the Atoms so Small?

A good method of developing ‘the naive physicist’s ideas’ is to start from the odd, almost ludicrous, question: Why are atoms so small? To begin with, they are very small indeed. Every little piece of matter handled in everyday life contains an enormous number of them. Many examples have been devised to bring this fact home to an audience, none of them more impressive than the one used by Lord Kelvin:

Suppose that you could mark the molecules in a glass of water; then pour the contents of the glass into the ocean and stir the latter thoroughly so as to distribute the marked molecules uniformly throughout the seven seas; if then you took a glass of water anywhere out of the ocean, you would find in it about a hundred of your marked molecules.

The actual sizes of atoms lie between about 1/5000 and 1/2000 the wave-length of yellow light. The comparison is significant, because the wave-length roughly indicates the dimensions of the smallest grain still recognizable in the microscope. Thus it will be seen that such a grain still contains thousands of millions of atoms.

Now, why are atoms so small? Clearly, the question is an evasion. For it is not really aimed at the size of the atoms. It is concerned with the size of organisms, more particularly with the size of our own corporeal selves. Indeed, the atom is small, when referred to our civic unit of length, say the yard or the metre. In atomic physics one is accustomed to use the so-called Ångström (abbr. Å), which is the $10^{10}$th part of a metre, or in decimal notation 0.0000000001 metre. Atomic diameters range between 1 and 2 Å. Now those civic units (in relation to which the atoms are so small) are closely related to the size of our bodies. There is a story tracing the yard back to the humour of an English king whom his councillors asked what unit to adopt – and he stretched out his arm sideways and said: ‘Take the distance from the middle of my chest to my fingertips, that will do all right.’ True or not, the story is significant for our purpose. The king would naturally indicate a length comparable with that of his own body, knowing that anything else would be very inconvenient. With all his predilection for the Ångström unit, the physicist prefers to be told that his new suit will require six and a half yards of tweed – rather than sixty-five thousand millions of Ångströms of tweed.
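The length scales quoted above can be checked with a few lines of arithmetic. The following is only a sketch and not part of the lectures: the function names are illustrative, the yard-to-metre factor is the modern definition rather than a figure from the text, and the ‘grain’ is assumed to be a cube one wave-length on a side, packed with atoms lying side by side.

```python
# Back-of-the-envelope check of the length scales quoted in the text.
ANGSTROM_IN_METRES = 1e-10   # 1 Å is the 10^10th part of a metre (from the text)
YARD_IN_METRES = 0.9144      # modern definition of the yard (assumption, not in the text)


def yards_to_angstroms(yards):
    """Convert a length in yards to ångströms."""
    return yards * YARD_IN_METRES / ANGSTROM_IN_METRES


def atoms_in_cubic_grain(atoms_per_side):
    """Atoms in a cube with `atoms_per_side` atoms along each edge (assumed packing)."""
    return atoms_per_side ** 3


tweed = yards_to_angstroms(6.5)
print(f"6.5 yards of tweed = {tweed:.2e} Å")

# An atom is 1/5000 to 1/2000 of the wave-length of yellow light, so a grain
# one wave-length across has between 2000 and 5000 atoms along each edge.
print(f"atoms in the smallest visible grain: "
      f"{atoms_in_cubic_grain(2000):.1e} to {atoms_in_cubic_grain(5000):.1e}")
```

The exact conversion comes out a little under sixty thousand million Å; the round figure of sixty-five thousand millions in the text corresponds to treating the yard as roughly a metre. The grain count lands in the range of thousands of millions, as stated.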

It thus being settled that our question really aims at the ratio of two lengths – that of our body and that of the atom – with an incontestable priority of independent existence on the side of the atom, the question truly reads: Why must our bodies be so large compared with the atom? I can imagine that many a keen student of physics or chemistry may have deplored the fact that every one of our sense organs, forming a more or less substantial part of our body and hence (in view of the magnitude of the said ratio) being itself composed of innumerable atoms, is much too coarse to be affected by the impact of a single atom. We cannot see or feel or hear the single atoms. Our hypotheses with regard to them differ widely from the immediate findings of our gross sense organs and cannot be put to the test of direct inspection. Must that be so? Is there an intrinsic reason for it? Can we trace back this state of affairs to some kind of first principle, in order to ascertain and to understand why nothing else is compatible with the very laws of Nature? Now this, for once, is a problem which the physicist is able to clear up completely. The answer to all the queries is in the affirmative.

The Working of an Organism Requires Exact Physical Laws

If it were not so, if we were organisms so sensitive that a single atom, or even a few atoms, could make a perceptible impression on our senses – Heavens, what would life be like! To stress one point: an organism of that kind would most certainly not be capable of developing the kind of orderly thought which, after passing through a long sequence of earlier stages, ultimately results in forming, among many other ideas, the idea of an atom.

Even though we select this one point, the following considerations would essentially apply also to the functioning of organs other than the brain and the sensorial system. Nevertheless, the one and only thing of paramount interest to us in ourselves is, that we feel and think and perceive. To the physiological process which is responsible for thought and sense all the others play an auxiliary part, at least from the human point of view, if not from that of purely objective biology. Moreover, it will greatly facilitate our task to choose for investigation the process which is closely accompanied by subjective events, even though we are ignorant of the true nature of this close parallelism. Indeed, in my view, it lies outside the range of natural science and very probably of human understanding altogether.

We are thus faced with the following question: Why should an organ like our brain, with the sensorial system attached to it, of necessity consist of an enormous number of atoms, in order that its physically changing state should be in close and intimate correspondence with a highly developed thought? On what grounds is the latter task of the said organ incompatible with being, as a whole or in some of its peripheral parts which interact directly with the environment, a mechanism sufficiently refined and sensitive to respond to and register the impact of a single atom from outside?

The reason for this is, that what we call thought (1) is itself an orderly thing, and (2) can only be applied to material, i.e. to perceptions or experiences, which have a certain degree of orderliness. This has two consequences. First, a physical organization, to be in close correspondence with thought (as my brain is with my thought) must be a very well-ordered organization, and that means that the events that happen within it must obey strict physical laws, at least to a very high degree of accuracy. Secondly, the physical impressions made upon that physically well-organized system by other bodies from outside, obviously correspond to the perception and experience of the corresponding thought, forming its material, as I have called it. Therefore, the physical interactions between our system and others must, as a rule, themselves possess a certain degree of physical orderliness, that is to say, they too must obey strict physical laws to a certain degree of accuracy.

Physical Laws Rest on Atomic Statistics and are Therefore Only Approximate

And why could all this not be fulfilled in the case of an organism composed of a moderate number of atoms only and sensitive already to the impact of one or a few atoms only?

Because we know all atoms to perform all the time a completely disorderly heat motion, which, so to speak, opposes itself to their orderly behaviour and does not allow the events that happen between a small number of atoms to enrol themselves according to any recognizable laws. Only in the co-operation of an enormously large number of atoms do statistical laws begin to operate and control the behaviour of these assemblies with an accuracy increasing as the number of atoms involved increases. It is in that way that the events acquire truly orderly features. All the physical and chemical laws that are known to play an important part in the life of organisms are of this statistical kind; any other kind of lawfulness and orderliness that one might think of is being perpetually disturbed and made inoperative by the unceasing heat motion of the atoms.

Their Precision is Based on the Large Number of Atoms Intervening

First Example (Paramagnetism)

Let me try to illustrate this by a few examples, picked somewhat at random out of thousands, and possibly not just the best ones to appeal to a reader who is learning for the first time about this condition of things – a condition which in modern physics and chemistry is as fundamental as, say, the fact that organisms are composed of cells is in biology, or as Newton’s Law in astronomy, or even as the series of integers, 1, 2, 3, 4, 5, … in mathematics. An entire newcomer should not expect to obtain from the following few pages a full understanding and appreciation of the subject, which is associated with the illustrious names of Ludwig Boltzmann and Willard Gibbs and treated in textbooks under the name of ‘statistical thermodynamics’.

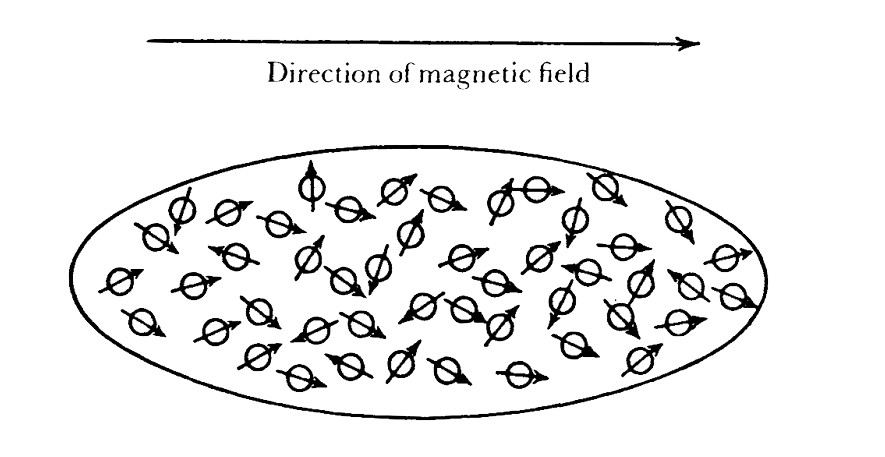

Figure 1: Paramagnetism

If you fill an oblong quartz tube with oxygen gas and put it into a magnetic field, you find that the gas is magnetized. The magnetization is due to the fact that the oxygen molecules are little magnets and tend to orientate themselves parallel to the field, like a compass needle. But you must not think that they actually all turn parallel. For if you double the field, you get double the magnetization in your oxygen body, and that proportionality goes on to extremely high field strengths, the magnetization increasing at the rate of the field you apply. This is a particularly clear example of a purely statistical law. The orientation the field tends to produce is continually counteracted by the heat motion, which works for random orientation. The effect of this striving is, actually, only a small preference for acute over obtuse angles between the dipole axes and the field. Though the single atoms change their orientation incessantly, they produce on the average (owing to their enormous number) a constant small preponderance of orientation in the direction of the field and proportional to it. This ingenious explanation is due to the French physicist P. Langevin. It can be checked in the following way.

If the observed weak magnetization is really the outcome of rival tendencies, namely, the magnetic field, which aims at combing all the molecules parallel, and the heat motion, which makes for random orientation, then it ought to be possible to increase the magnetization by weakening the heat motion, that is to say, by lowering the temperature, instead of reinforcing the field. That is confirmed by experiment, which gives the magnetization inversely proportional to the absolute temperature, in quantitative agreement with theory (Curie’s law). Modern equipment even enables us, by lowering the temperature, to reduce the heat motion to such insignificance that the orientating tendency of the magnetic field can assert itself, if not completely, at least sufficiently to produce a substantial fraction of ‘complete magnetization’. In this case we no longer expect that double the field strength will double the magnetization, but that the latter will increase less and less with increasing field, approaching what is called ‘saturation’. This expectation too is quantitatively confirmed by experiment. Notice that this behaviour entirely depends on the large numbers of molecules which co-operate in producing the observable magnetization. Otherwise, the latter would not be a constant at all, but would, by fluctuating quite irregularly from one second to the next, bear witness to the vicissitudes of the contest between heat motion and field.
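Both regimes described above, proportionality in weak fields and saturation in strong ones, follow from a single curve. The sketch below uses the classical Langevin function, $L(x) = \coth x - 1/x$, a standard textbook result that the lectures describe only in words. The symbol $x = \mu B / kT$ (dipole moment times field, divided by thermal energy) and the function name are assumptions introduced here for illustration.

```python
import math


def langevin(x):
    """Fraction of 'complete magnetization', L(x) = coth(x) - 1/x.

    x = mu * B / (k * T) compares the aligning energy of the field with the
    thermal energy. The closed form is the standard classical result and is
    an addition here, not a formula quoted from the lectures.
    """
    if abs(x) < 1e-4:
        return x / 3.0  # leading term of the series; avoids cancellation near 0
    return 1.0 / math.tanh(x) - 1.0 / x


# Weak field (or high temperature): doubling x doubles the magnetization.
weak_ratio = langevin(0.02) / langevin(0.01)

# Strong field (or very low temperature): doubling x hardly helps (saturation).
strong_ratio = langevin(40.0) / langevin(20.0)

print(f"weak regime:   L(2x)/L(x) = {weak_ratio:.4f}")
print(f"strong regime: L(2x)/L(x) = {strong_ratio:.4f}")
```

Because $x$ is inversely proportional to the absolute temperature, halving the temperature at fixed field has the same effect as doubling the field. In the weak regime this is Curie’s law.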

Second Example (Brownian Movement, Diffusion)

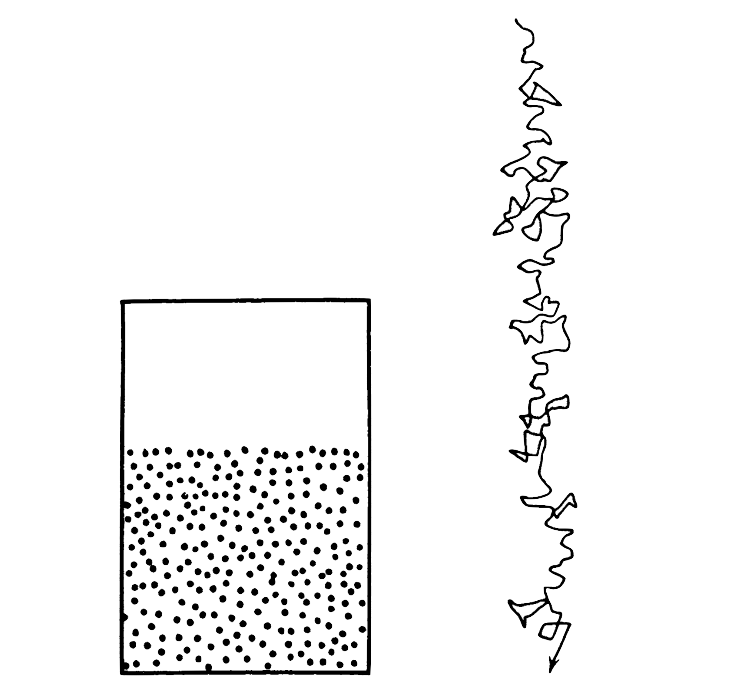

If you fill the lower part of a closed glass vessel with fog, pt consisting of minute droplets, you will find that the upper or boundary of the fog gradually sinks, with a well-defined velocity, determined by the viscosity of the air and the size and the specific gravity of the droplets. But if you look at one of the droplets under the microscope you find that it does not permanently sink with constant velocity, but performs a very irregular movement, the so-called Brownian movement, which corresponds to a regular sinking only on the average. Now these droplets are not atoms, but they are sufficiently small and light to be not entirely insusceptible to the impact of one single molecule of those which hammer their surface in perpetual impacts. They are thus knocked about and can only on the average follow the influence of gravity.

Figure 2 (left): Sinking Fog. Figure 3 (right): Brownian movement of a sinking droplet

This example shows what funny and disorderly experience we should have if our senses were susceptible to the impact of a few molecules only. There are bacteria and other organisms so small that they are strongly affected by this phenomenon. Their movements are determined by the thermic whims of the surrounding medium; they have no choice. If they had some locomotion of their own they might nevertheless succeed in getting from one place to another – but with some difficulty, since the heat motion tosses them like a small boat in a rough sea.
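The contrast between the erratic single droplet and the steadily sinking fog boundary can be reproduced with a toy simulation. This is a minimal sketch under stated assumptions: each droplet receives a constant downward drift plus an independent Gaussian kick at every time step, and the numbers (drift, kick size, number of droplets) are illustrative rather than physical values.

```python
import random


def final_height(steps, drift, kick, rng):
    """Height of one droplet after `steps` time steps.

    Each step adds a steady downward `drift` (gravity against viscosity)
    plus a random thermal kick drawn from a Gaussian of width `kick`.
    All values are illustrative, not measured ones.
    """
    height = 0.0
    for _ in range(steps):
        height += drift + rng.gauss(0.0, kick)
    return height


rng = random.Random(1943)  # fixed seed so the run is repeatable
heights = [final_height(steps=100, drift=-1.0, kick=5.0, rng=rng)
           for _ in range(2000)]

mean_height = sum(heights) / len(heights)
print(f"mean height of the droplets: {mean_height:.1f} (pure sinking would give -100)")
print(f"single droplets range from {min(heights):.0f} to {max(heights):.0f}")
```

The average follows the drift closely, while individual droplets end up scattered widely around it, some even above their starting point.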

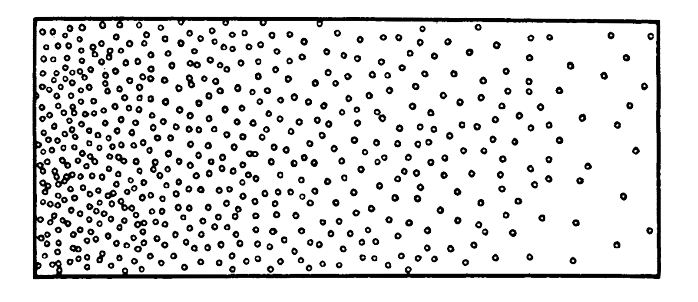

Figure 4: Diffusion from left to right in a solution of varying concentration.

A phenomenon very much akin to Brownian movement is that of diffusion. Imagine a vessel filled with a fluid, say water, with a small amount of some coloured substance dissolved in it, say potassium permanganate, not in uniform concentration, but rather as in Fig. 4, where the dots indicate the molecules of the dissolved substance (permanganate) and the concentration diminishes from left to right. If you leave this system alone a very slow process of ‘diffusion’ sets in, the permanganate spreading in the direction from left to right, that is, from the places of higher concentration towards the places of lower concentration, until it is equally distributed through the water.

The remarkable thing about this rather simple and apparently not particularly interesting process is that it is in no way due, as one might think, to any tendency or force driving the permanganate molecules away from the crowded region to the less crowded one, like the population of a country spreading to those parts where there is more elbow-room. Nothing of the sort happens with our permanganate molecules. Every one of them behaves quite independently of all the others, which it very seldom meets. Every one of them, whether in a crowded region or in an empty one, suffers the same fate of being continually knocked about by the impacts of the water molecules and thereby gradually moving on in an unpredictable direction – sometimes towards the higher, sometimes towards the lower, concentrations, sometimes obliquely. The kind of motion it performs has often been compared with that of a blindfolded person on a large surface imbued with a certain desire of ‘walking’, but without any preference for any particular direction, and so changing his line continuously.

That this random walk of the permanganate molecules, the same for all of them, should yet produce a regular flow towards the smaller concentration and ultimately make for uniformity of distribution, is at first sight perplexing – but only at first sight. If you contemplate in Fig. 4 thin slices of approximately constant concentration, the permanganate molecules which in a given moment are contained in a particular slice will, by their random walk, it is true, be carried with equal probability to the right or to the left. But precisely in consequence of this, a plane separating two neighbouring slices will be crossed by more molecules coming from the left than in the opposite direction, simply because to the left there are more molecules engaged in random walk than there are to the right. And as long as that is so the balance will show up as a regular flow from left to right, until a uniform distribution is reached.
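The counting argument in the preceding paragraph can be tried out directly. In the sketch below (an illustration, with hypothetical names and made-up molecule counts) two neighbouring slices hold different numbers of molecules, and in one time step every molecule hops left or right with equal probability. Only those hopping towards the separating plane cross it.

```python
import random


def net_crossings(n_left, n_right, rng):
    """Net number of molecules crossing the plane from left to right in one step.

    Every molecule, in either slice, steps left or right with probability 1/2;
    no molecule 'knows' about the concentration on the other side.
    """
    left_to_right = sum(1 for _ in range(n_left) if rng.random() < 0.5)
    right_to_left = sum(1 for _ in range(n_right) if rng.random() < 0.5)
    return left_to_right - right_to_left


rng = random.Random(4)  # fixed seed so the run is repeatable
print("unequal slices (10000 vs 6000):", net_crossings(10_000, 6_000, rng))
print("equal slices   (8000 vs 8000): ", net_crossings(8_000, 8_000, rng))
```

With unequal slices the net flow is close to half the difference in numbers, about 2,000 here. With equal slices only a small random remainder is left, which is the statistical noise discussed next.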

When these considerations are translated into mathematical language the exact law of diffusion is reached in the form of a partial differential equation $\partial \rho/\partial t = D \nabla^2 \rho$, which I shall not trouble the reader by explaining, though its meaning in ordinary language is again simple enough. The reason for mentioning the stern ‘mathematically exact’ law here, is to emphasize that its physical exactitude must nevertheless be challenged in every particular application. Being based on pure chance, its validity is only approximate. If it is, as a rule, a very good approximation, that is only due to the enormous number of molecules that co-operate in the phenomenon. The smaller their number, the larger the quite haphazard deviations we must expect – and they can be observed under favourable circumstances.
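In ordinary language, the equation says that the concentration at a place rises when its neighbours hold, on average, more than it does, and falls when they hold less. A minimal numerical sketch of this reading follows. It assumes one spatial dimension, closed vessel walls, and a simple explicit finite-difference step; the discretization, the parameter values and the name `diffuse` are choices made here for illustration, not anything taken from the lectures.

```python
def diffuse(rho, d_coeff=1.0, dx=1.0, dt=0.2, steps=1):
    """Advance a 1-D concentration profile by `steps` explicit time steps.

    Discretizes d(rho)/dt = D * d2(rho)/dx2 with no flow through the two end
    walls. The scheme is only stable if D * dt / dx**2 <= 0.5.
    """
    rho = list(rho)
    alpha = d_coeff * dt / dx ** 2
    for _ in range(steps):
        # flow[i]: amount passing from cell i to cell i + 1 during one step,
        # proportional to the concentration difference between the two cells
        flow = [alpha * (rho[i] - rho[i + 1]) for i in range(len(rho) - 1)]
        for i, amount in enumerate(flow):
            rho[i] -= amount
            rho[i + 1] += amount
    return rho


start = [10.0, 8.0, 6.0, 4.0, 2.0, 0.0]  # concentration falling from left to right
for n_steps in (0, 10, 500):
    profile = diffuse(start, steps=n_steps)
    print(n_steps, [round(value, 2) for value in profile])
```

The total amount of substance stays fixed while the profile flattens towards a uniform value. This smooth behaviour is the idealized law; with few molecules per cell, the random deviations described in the text would be superimposed on it.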

Third Example (Limits of Accuracy of Measuring)

The last example we shall give is closely akin to the second one, but has a particular interest. A light body, suspended by a long thin fibre in equilibrium orientation, is often used by physicists to measure weak forces which deflect it from that position of equilibrium, electric, magnetic or gravitational forces being applied so as to twist it around the vertical axis. (The light body must, of course, be chosen appropriately for the particular purpose.) The continued effort to improve the accuracy of this very commonly used device of a ‘torsional balance’, has encountered a curious limit, most interesting in itself. In choosing lighter and lighter bodies and thinner and longer fibres – to make the balance susceptible to weaker and weaker forces – the limit was reached when the suspended body became noticeably susceptible to the impacts of the heat motion of the surrounding molecules and began to perform an incessant, irregular ‘dance’ about its equilibrium position, much like the trembling of the droplet in the second example.

Though this behaviour sets no absolute limit to the accuracy of measurements obtained with the balance, it sets a practical one. The uncontrollable effect of the heat motion competes with the effect of the force to be measured and makes the single deflection observed insignificant. You have to multiply observations, in order to eliminate the effect of the Brownian movement of your instrument. This example is, I think, particularly illuminating in our present investigation. For our organs of sense, after all, are a kind of instrument. We can see how useless they would be if they became too sensitive.
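Why multiplying observations helps can be seen in a small sketch. It assumes that each reading equals the true deflection plus an independent Gaussian disturbance from the heat motion; the numbers and the name `averaged_reading` are illustrative assumptions.

```python
import random


def averaged_reading(true_deflection, thermal_noise, n_obs, rng):
    """Mean of `n_obs` readings, each disturbed by independent thermal noise."""
    total = 0.0
    for _ in range(n_obs):
        total += true_deflection + rng.gauss(0.0, thermal_noise)
    return total / n_obs


rng = random.Random(7)  # fixed seed so the run is repeatable
for n_obs in (1, 100, 10_000):
    value = averaged_reading(true_deflection=1.0, thermal_noise=5.0,
                             n_obs=n_obs, rng=rng)
    print(f"{n_obs:>6} observations -> estimated deflection {value:.3f}")
```

A single reading is dominated by the noise, which here is five times the signal. Under the independence assumption the scatter of the average shrinks in proportion to the inverse square root of the number of observations.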

The √n Rule

So much for examples, for the present. I will merely add that there is not one law of physics or chemistry, of those that are relevant within an organism or in its interactions with its environment, that I might not choose as an example. The detailed explanation might be more complicated, but the salient point would always be the same and thus the description would become monotonous. But I should like to add one very important quantitative statement concerning the degree of inaccuracy to be expected in any physical law, the so-called √n law. I will first illustrate it by a simple example and then generalize it.

If I tell you that a certain gas under certain conditions of pressure and temperature has a certain density, and if I expressed this by saying that within a certain volume (of a size relevant for some experiment) there are under these conditions just n molecules of the gas, then you might be sure that if you could test my statement in a particular moment of time, you would find it inaccurate, the departure being of the order of √n. Hence if the number n = 100, you would find a departure of about 10, thus relative error = 10%. But if n = 1 million, you would be likely to find a departure of about 1,000, thus relative error = 0.1%. Now, roughly speaking, this statistical law is quite general. The laws of physics and physical chemistry are inaccurate within a probable relative error of the order of 1/√n, where n is the number of molecules that co-operate to bring about that law – to produce its validity within such regions of space or time (or both) that matter, for some considerations or for some particular experiment.
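The two numerical cases above, and the origin of the √n departure, can be checked with a short sketch. The simulation assumes that every molecule in a vessel sits inside the test volume independently with a fixed probability, which is one simple way to model a dilute gas; the vessel size, the fraction and the function names are illustrative.

```python
import math
import random


def relative_error(n):
    """Expected relative departure, 1/sqrt(n), for n co-operating molecules."""
    return 1.0 / math.sqrt(n)


def count_in_volume(total_molecules, fraction, rng):
    """Count molecules found in a test volume holding `fraction` of the vessel.

    Each molecule is inside the test volume independently with probability
    `fraction` (a modelling assumption for a dilute gas).
    """
    return sum(1 for _ in range(total_molecules) if rng.random() < fraction)


for n in (100, 1_000_000):
    print(f"n = {n:>9,}: departure ~ {math.sqrt(n):,.0f}, "
          f"relative error ~ {relative_error(n):.1%}")

# Repeated 'snapshots' of a test volume expected to hold n = 100 molecules.
rng = random.Random(0)  # fixed seed so the run is repeatable
counts = [count_in_volume(5_000, 0.02, rng) for _ in range(400)]
mean = sum(counts) / len(counts)
spread = math.sqrt(sum((c - mean) ** 2 for c in counts) / len(counts))
print(f"simulated: mean count {mean:.1f}, typical departure {spread:.1f}")
```

The simulated departure comes out near 10 for a mean of about 100, in line with the rule.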

You see from this again that an organism must have a comparatively gross structure in order to enjoy the benefit of fairly accurate laws, both for its internal life and for its interplay with the external world. For otherwise the number of co-operating particles would be too small, the ‘law’ too inaccurate. The particularly exigent demand is the square root. For though a million is a reasonably large number, an accuracy of just 1 in 1,000 is not overwhelmingly good, if a thing claims the dignity of being a ‘Law of Nature’.

Chapter 2: The Hereditary Mechanism

The Classical Physicist’s Expectation, Far from Being Trivial, is Wrong

Thus we have come to the conclusion that an organism and all the biologically relevant processes that it experiences must have an extremely ‘many-atomic’ structure and must be safeguarded against haphazard, ‘single-atomic’ events attaining too great importance. That, the ‘naive physicist’ tells us, is essential, so that the organism may, so to speak, have sufficiently accurate physical laws on which to draw for setting up its marvellously regular and well-ordered working. How do these conclusions, reached, biologically speaking, a priori (that is, from the purely physical point of view), fit in with actual biological facts?

At first sight one is inclined to think that the conclusions are little more than trivial. A biologist of, say, thirty years ago might have said that, although it was quite suitable for a popular lecturer to emphasize the importance, in the organism as elsewhere, of statistical physics, the point was, in fact, rather a familiar truism. For, naturally, not only the body of an adult individual of any higher species, but every single cell composing it contains a ‘cosmical’ number of single atoms of every kind. And every particular physiological process that we observe, either within the cell or in its interaction with the cell environment, appears – or appeared thirty years ago – to involve such enormous numbers of single atoms and single atomic processes that all the relevant laws of physics and physical chemistry would be safeguarded even under the very exacting demands of statistical physics in respect of large numbers; this demand illustrated just now by the √n rule.

Today, we know that this opinion would have been a mistake. As we shall presently see, incredibly small groups of atoms, much too small to display exact statistical laws, do play a dominating role in the very orderly and lawful events within a living organism. They have control of the observable large-scale features which the organism acquires in the course of its development, they determine important characteristics of its functioning; and in all this very sharp and very strict biological laws are displayed.

I must begin with giving a brief summary of the situation in biology, more especially in genetics – in other words, I have to summarize the present state of knowledge in a subject of which I am not a master. This cannot be helped and I apologize, particularly to any biologist, for the dilettante character of my summary. On the other hand, I beg leave to put the prevailing ideas before you more or less dogmatically. A poor theoretical physicist could not be expected to produce anything like a competent survey of the experimental evidence, which consists of a large number of long and beautifully interwoven series of breeding experiments of truly unprecedented ingenuity on the one hand and of direct observations of the living cell, conducted with all the refinement of modern microscopy, on the other.

The Hereditary Code-Script (Chromosomes)

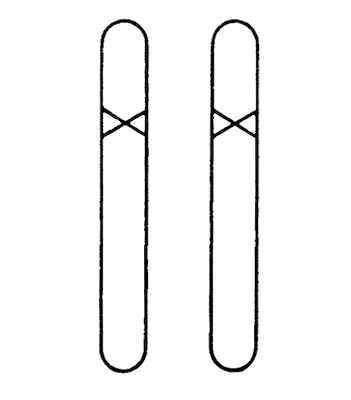

Let me use the word ‘pattern’ of an organism in the sense in which the biologist calls it ‘the four-dimensional pattern’, meaning not only the structure and functioning of that organism in the adult, or in any other particular stage, but the whole of its ontogenetic development from the fertilized egg cell to the stage of maturity, when the organism begins to reproduce itself. Now, this whole four-dimensional pattern is known to be determined by the structure of that one cell, the fertilized egg. Moreover, we know that it is essentially determined by the structure of only a small part of that cell, its nucleus. This nucleus, in the ordinary ‘resting state’ of the cell, usually appears as a network of chromatine, distributed over the cell. But in the vitally important processes of cell division (mitosis and meiosis, see below) it is seen to consist of a set of particles, usually fibre-shaped or rod-like, called the chromosomes, which number 8 or 12 or, in man, 48. But I ought really to have written these illustrative numbers as 2 × 4, 2 × 6, …, 2 × 24, …, and I ought to have spoken of two sets, in order to use the expression in the customary strict meaning of the biologist. For though the single chromosomes are sometimes clearly distinguished and individualized by shape and size, the two sets are almost entirely alike. As we shall see in a moment, one set comes from the mother (egg cell), one from the father (fertilizing spermatozoon). It is these chromosomes, or probably only an axial skeleton fibre of what we actually see under the microscope as the chromosome, that contain in some kind of code-script the entire pattern of the individual’s future development and of its functioning in the mature state. Every complete set of chromosomes contains the full code; so there are, as a rule, two copies of the latter in the fertilized egg cell, which forms the earliest stage of the future individual.

In calling the structure of the chromosome fibres a code-script we mean that the all-penetrating mind, once conceived by Laplace, to which every causal connection lay immediately open, could tell from their structure whether the egg would develop, under suitable conditions, into a black cock or into a speckled hen, into a fly or a maize plant, a rhododendron, a beetle, a mouse or a woman. To which we may add, that the appearances of the egg cells are very often remarkably similar; and even when they are not, as in the case of the comparatively gigantic eggs of birds and reptiles, the difference is not so much in the relevant structures as in the nutritive material which in these cases is added for obvious reasons. But the term code-script is, of course, too narrow. The chromosome structures are at the same time instrumental in bringing about the development they foreshadow. They are law-code and executive power – or, to use another simile, they are architect’s plan and builder’s craft – in one.

Growth of the Body by Cell Division (Mitosis)

How do the chromosomes behave in ontogenesis? The growth of an organism is effected by consecutive cell divisions. Such a cell division is called mitosis. It is, in the life of a cell, not such a very frequent event as one might expect, considering the enormous number of cells of which our body is composed. In the beginning the growth is rapid. The egg divides into two ‘daughter cells’ which, at the next step, will produce a generation of four, then of 8, 16, 32, 64, …, etc. The frequency of division will not remain exactly the same in all parts of the growing body, and that will break the regularity of these numbers. But from their rapid increase we infer by an easy computation that on the average as few as 50 or 60 successive divisions suffice to produce the number of cells in a grown man – or, say, ten times the number, taking into account the exchange of cells during lifetime. Thus, a body cell of mine is, on the average, only the 50th or 60th ‘descendant’ of the egg that was I.
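The ‘easy computation’ referred to here can be sketched in a few lines of Python. The cell counts below (10^14 to 10^16) are illustrative assumptions rather than figures stated in this passage, and the function name is hypothetical.

```python
import math


def divisions_needed(cell_count: float) -> int:
    """Smallest number of successive doublings that yields at least cell_count cells."""
    return math.ceil(math.log2(cell_count))


# Assumed orders of magnitude for the number of cells in a grown body,
# the last one allowing a tenfold margin for cells exchanged during lifetime.
for cells in (1e14, 1e15, 1e16):
    print(f"{cells:.0e} cells -> {divisions_needed(cells)} divisions")
```

Under these assumptions the answer stays close to fifty divisions, because each additional factor of ten in the cell count costs only three or four further doublings.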

In Mitosis Every Chromosome is Duplicated

How do the chromosomes behave on mitosis? They duplicate – both sets, both copies of the code, duplicate. The process has been intensively studied under the microscope and is of paramount interest, but much too involved to describe here in detail. The salient point is that each of the two ‘daughter cells’ gets a dowry of two further complete sets of chromosomes exactly similar to those of the parent cell. So all the body cells are exactly alike as regards their chromosome treasure.

However little we understand the device we cannot but think that it must be in some way very relevant to the functioning of the organism, that every single cell, even a less important one, should be in possession of a complete (double) copy of the code-script. Some time ago we were told in the newspapers that in his African campaign General Montgomery made a point of having every single soldier of his army meticulously informed of all his designs. If that is true (as it conceivably might be, considering the high intelligence and reliability of his troops) it provides an excellent analogy to our case, in which the corresponding fact certainly is literally true. The most surprising fact is the doubleness of the chromosome set, maintained throughout the mitotic divisions. That it is the outstanding feature of the genetic mechanism is most strikingly revealed by the one and only departure from the rule, which we have now to discuss.

Reductive Division (Meiosis) and Fertilization (Syngamy)

Very soon after the development of the individual has set in, a group of cells is reserved for producing at a later stage the so-called gametes, the sperm cells or egg cells, as the case may be, needed for the reproduction of the individual in maturity. ‘Reserved’ means that they do not serve other purposes in the meantime and suffer many fewer mitotic divisions. The exceptional or reductive division (called meiosis) is the one by which eventually, on maturity, the gametes are produced from these reserved cells, as a rule only a short time before syngamy is to take place. In meiosis the double chromosome set of the parent cell simply separates into two single sets, one of which goes to each of the two daughter cells, the gametes. In other words, the mitotic doubling of the number of chromosomes does not take place in meiosis, the number remains constant and thus every gamete receives only half – that is, only one complete copy of the code, not two, e.g. in man only 24, not 2 × 24 = 48.

Cells with only one chromosome set are called haploid (from Greek ἁπλοῦς, single). Thus the gametes are haploid, the ordinary body cells diploid (from Greek διπλοῦς, double). Individuals with three, four, … or generally speaking with many chromosome sets in all their body cells occur occasionally; the latter are then called triploid, tetraploid, …, polyploid. In the act of syngamy the male gamete (spermatozoon) and the female gamete (egg), both haploid cells, coalesce to form the fertilized egg cell, which is thus diploid. One of its chromosome sets comes from the mother, one from the father.

Haploid Individuals

One other point needs rectification. Though not indispensable for our purpose it is of real interest, since it shows that actually a fairly complete code-script of the ‘pattern’ is contained in every single set of chromosomes.

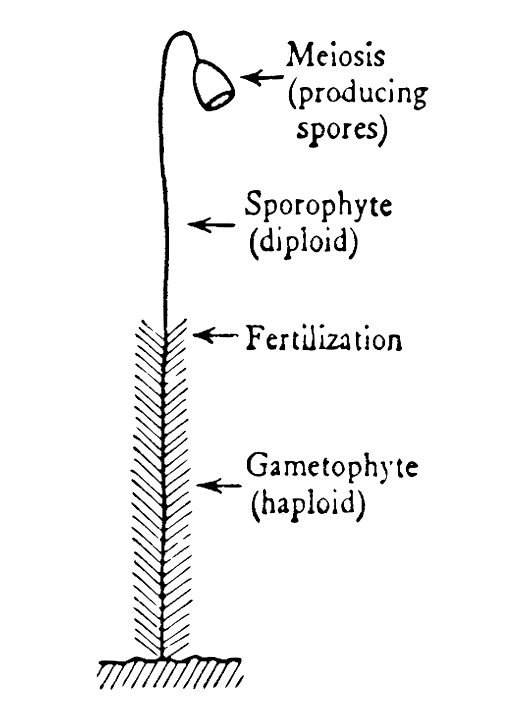

There are instances of meiosis not being followed shortly after by fertilization, the haploid cell (the ‘gamete’) undergoing meanwhile numerous mitotic cell divisions, which result in building up a complete haploid individual. This is the case in the male bee, the drone, which is produced parthenogenetically, that is, from non-fertilized and therefore haploid eggs of the queen. The drone has no father! All its body cells are haploid. If you please, you may call it a grossly exaggerated spermatozoon; and actually, as everybody knows, to function as such happens to be its one and only task in life. However, that is perhaps a ludicrous point of view. For the case is not quite unique. There are families of plants in which the haploid gamete which is produced by meiosis and is called a spore in such cases falls to the ground and, like a seed, develops into a true haploid plant comparable in size with the diploid.

Figure 5: Alternation of Generations.

Fig. 5 is a rough sketch of a moss, well known in our forests. The leafy lower part is the haploid plant, called the gametophyte, because at its upper end it develops sex organs and gametes, which by mutual fertilization produce in the ordinary way the diploid plant, the bare stem with the capsule at the top. This is called the sporophyte, because it produces, by meiosis, the spores in the capsule at the top. When the capsule opens, the spores fall to the ground and develop into a leafy stem, etc. The course of events is appropriately called alternation of generations. You may, if you choose, look upon the ordinary case, man and the animals, in the same way. But the ‘gametophyte’ is then as a rule a very short-lived, unicellular generation, spermatozoon or egg cell as the case may be. Our body corresponds to the sporophyte. Our ‘spores’ are the reserved cells from which, by meiosis, the unicellular generation springs.

The Outstanding Relevance of the Reductive Division

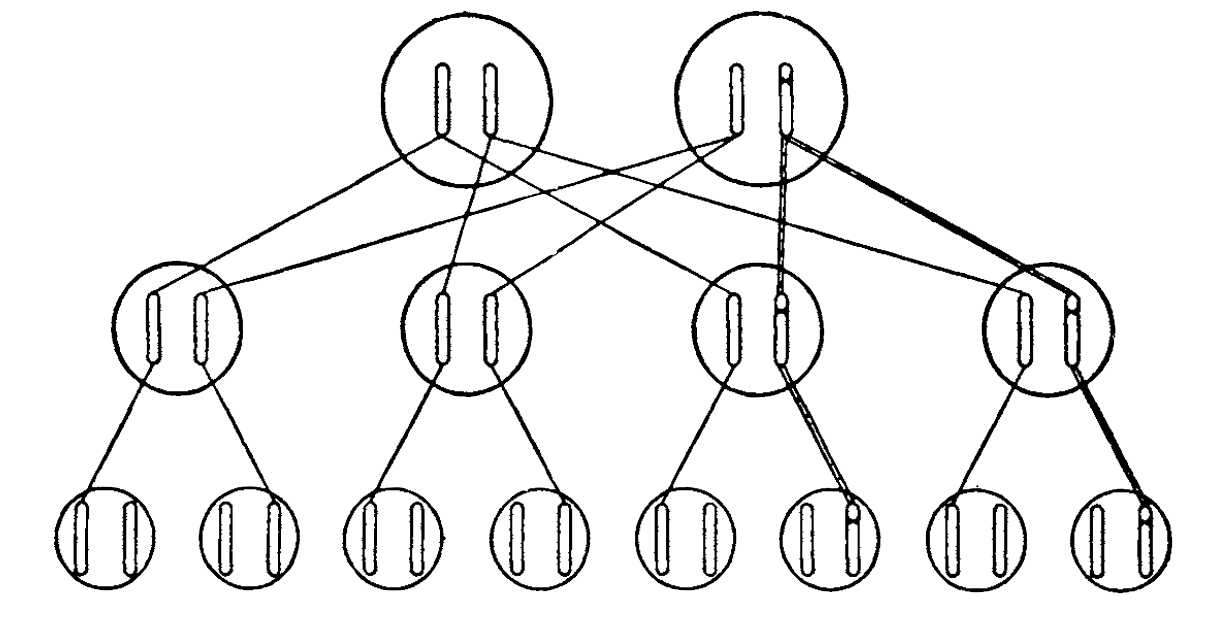

The important, the really fateful event in the process of reproduction of the individual is not fertilization but meiosis. One set of chromosomes is from the father, one from the mother. Neither chance nor destiny can interfere with that. Every man owes just half of his inheritance to his mother, half of it to his father. That one or the other strain seems often to prevail is due to other reasons which we shall come to later. (Sex itself is, of course, the simplest instance of such prevalence.)

But when you trace the origin of your inheritance back to your grandparents, the case is different. Let me fix attention on my paternal set of chromosomes, in particular on one of them, say No. 5. It is a faithful replica either of the No. 5 my father received from his father or of the No. 5 he had received from his mother. The issue was decided by a 50:50 chance in the meiosis taking place in my father’s body in November 1886 and producing the spermatozoon which a few days later was to be effective in begetting me. Exactly the same story could be repeated about chromosomes Nos. 1, 2, 3, …, 24 of my paternal set, and mutatis mutandis about every one of my maternal chromosomes. Moreover, all the 48 issues are entirely independent. Even if it were known that my paternal chromosome No. 5 came from my grandfather Josef Schrodinger, the No. 7 still stands an equal chance of being either also from him, or from his wife Marie, nee Bogner.
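A minimal Python sketch of these independent 50:50 issues, assuming (as in the description so far) that every chromosome is passed on undivided. The function names and the random seed are hypothetical; the count of 24 chromosomes per set is the text’s own figure (the modern count for man is 23).

```python
import random


def possible_sets(chromosomes_per_set: int) -> int:
    """Number of distinct grandparental mixtures when each chromosome is a 50:50 issue."""
    return 2 ** chromosomes_per_set


def simulate_paternal_set(chromosomes_per_set: int, rng: random.Random) -> list[str]:
    """Decide independently, for each chromosome, which grandparent it copies."""
    return [rng.choice(["grandfather", "grandmother"]) for _ in range(chromosomes_per_set)]


rng = random.Random(1886)  # arbitrary seed, used only to make the run reproducible
paternal_set = simulate_paternal_set(24, rng)
print(paternal_set.count("grandfather"), "of 24 chromosomes come from the grandfather")
print(possible_sets(24), "equally likely paternal sets")
```

With 24 independent issues there are 2^24 = 16,777,216 equally likely paternal sets, and as many maternal ones.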

Crossing-Over. Location Of Properties

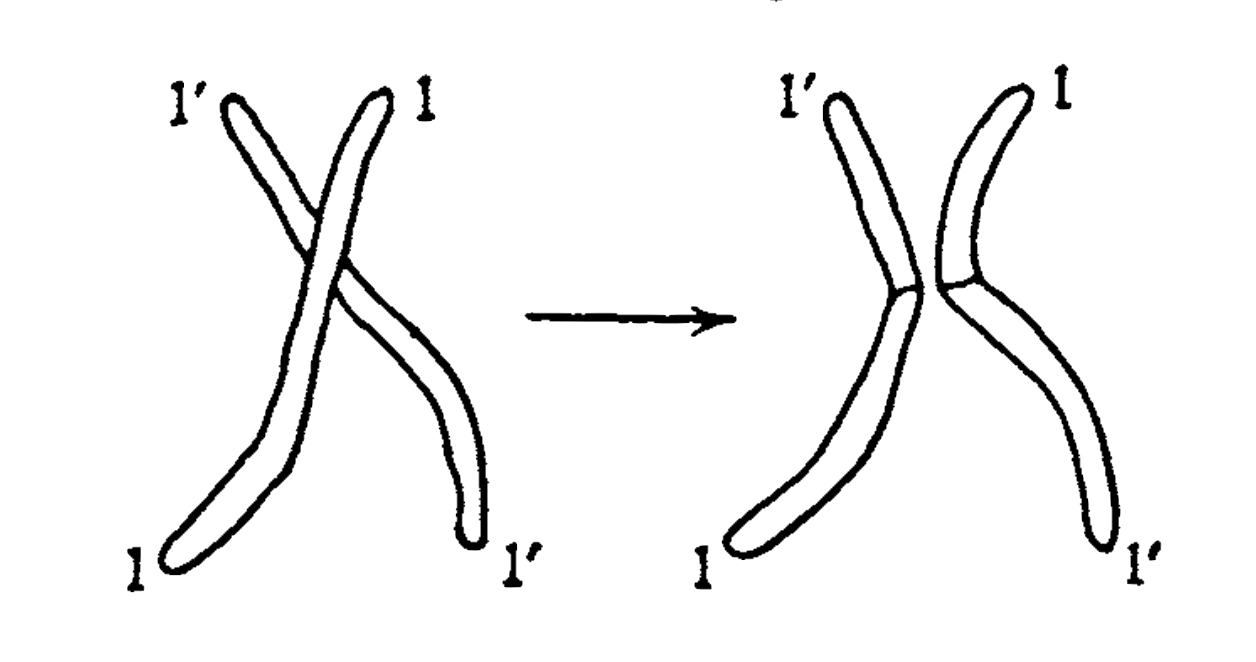

But pure chance has been given even a wider range in mixing the grandparental inheritance in the offspring than would appear from the preceding description, in which it has been tacitly assumed, or even explicitly stated, that a particular chromosome as a whole was either from the grandfather or from the grandmother; in other words that the single chromosomes are passed on undivided. In actual fact they are not, or not always. Before being separated in the reductive division, any two ‘homologous’ chromosomes come into close contact with each other, during which they sometimes exchange entire portions in the way illustrated in Fig. 6. By this process, called ‘crossing-over’, two properties situated in the respective parts of that chromosome will be separated in the grandchild, who will follow the grandfather in one of them, the grandmother in the other one. The act of crossing-over, being neither very rare nor very frequent, has provided us with invaluable information regarding the location of properties in the chromosomes. For a full account we should have to draw on conceptions not introduced before the next chapter (e.g. heterozygosy, dominance, etc.); but as that would take us beyond the range of this little book, let me indicate the salient point right away.

Figure 6: Crossing-over. Left: the two homologous chromosomes in contact. Right: after exchange and separation.

If there were no crossing-over, two properties for which the same chromosome is responsible would always be passed on together, no descendant receiving one of them without receiving the other as well; but two properties due to different chromosomes would either stand a 50:50 chance of being separated or they would invariably be separated – the latter when they were situated in homologous chromosomes of the same ancestor, which could never go together.

These rules and chances are interfered with by crossing-over. Hence the probability of this event can be ascertained by registering carefully the percentage composition of the offspring in extended breeding experiments, suitably laid out for the purpose. In analysing the statistics, one accepts the suggestive working hypothesis that the ‘linkage’ between two properties situated in the same chromosome is the less frequently broken by crossing-over, the nearer they lie to each other. For then there is less chance of the point of exchange lying between them, whereas properties located near the opposite ends of the chromosomes are separated by every crossing-over. (Much the same applies to the recombination of properties located in homologous chromosomes of the same ancestor.) In this way one may expect to get from the ‘statistics of linkage’ a sort of ‘map of properties’ within every chromosome.

These anticipations have been fully confirmed. In the cases to which tests have been thoroughly applied (mainly, but not only, Drosophila) the tested properties actually divide into as many separate groups, with no linkage from group to group, as there are different chromosomes (four in Drosophila). Within every group a linear map of properties can be drawn up which accounts quantitatively for the degree of linkage between any two of that group, so that there is little doubt that they actually are located, and located along a line, as the rod-like shape of the chromosome suggests.
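How a linear map follows from the ‘statistics of linkage’ can be illustrated with a small sketch. The three loci and their recombination fractions are hypothetical numbers chosen for illustration, not data from any experiment, and the method (choosing the order whose neighbouring distances are shortest in total) is one simple way to express the working hypothesis, not necessarily the geneticist’s own procedure.

```python
from itertools import permutations

# Hypothetical fractions of offspring in which each pair of properties
# was separated by crossing-over (smaller means closer together).
RECOMBINATION = {
    frozenset("AB"): 0.10,
    frozenset("BC"): 0.05,
    frozenset("AC"): 0.14,
}


def path_length(order: tuple[str, ...], recombination: dict[frozenset, float]) -> float:
    """Sum of the recombination fractions between neighbouring loci in this order."""
    return sum(recombination[frozenset(pair)] for pair in zip(order, order[1:]))


def linear_order(recombination: dict[frozenset, float]) -> tuple[str, ...]:
    """Order of loci along the chromosome that minimizes the total neighbour distance."""
    loci = sorted(set().union(*recombination))
    return min(permutations(loci), key=lambda order: path_length(order, recombination))


print(linear_order(RECOMBINATION))
```

With these numbers B comes out between A and C: the two most weakly linked properties lie at the ends, and the short distances add up approximately to the long one.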

Of course, the scheme of the hereditary mechanism, as drawn up here, is still rather empty and colourless, even slightly naive. For we have not said what exactly we understand by a property. It seems neither adequate nor possible to dissect into discrete ‘properties’ the pattern of an organism which is essentially a unity, a ‘whole’. Now, what we actually state in any particular case is, that a pair of ancestors were different in a certain well-defined respect (say, one had blue eyes, the other brown), and that the offspring follows in this respect either one or the other. What we locate in the chromosome is the seat of this difference. (We call it, in technical language, a ‘locus’, or, if we think of the hypothetical material structure underlying it, a ‘gene’.) Difference of property, to my view, is really the fundamental concept rather than property itself, notwithstanding the apparent linguistic and logical contradiction of this statement. The differences of the properties actually are discrete, as will emerge in the next chapter when we have to speak of mutations and the dry scheme hitherto presented will, as I hope, acquire more life and colour.

Maximum Size of a Gene

We have just introduced the term gene for the hypothetical material carrier of a definite hereditary feature. We must now stress two points which will be highly relevant to our investigation. The first is the size – or, better, the maximum size – of such a carrier; in other words, to how small a volume can we trace the location? The second point will be the permanence of a gene, to be inferred from the durability of the hereditary pattern.

As regards the size, there are two entirely independent estimates, one resting on genetic evidence (breeding experiments), the other on cytological evidence (direct microscopic inspection). The first is, in principle, simple enough. After having, in the way described above, located in the chromosome a considerable number of different (large-scale) features (say of the Drosophila fly) within a particular one of its chromosomes, to get the required estimate we need only divide the measured length of that chromosome by the number of features and multiply by the cross-section. For, of course, we count as different only such features as are occasionally separated by crossing-over, so that they cannot be due to the same (microscopic or molecular) structure. On the other hand, it is clear that our estimate can only give a maximum size, because the number of features isolated by this genetic analysis is continually increasing as work goes on.

The other estimate, though based on microscopic inspection, is really far less direct. Certain cells of Drosophila (namely, those of its salivary glands) are, for some reason, enormously enlarged, and so are their chromosomes. In them you distinguish a crowded pattern of transverse dark bands across the fibre. C. D. Darlington has remarked that the number of these bands (2,000 in the case he uses) is, though considerably larger, yet roughly of the same order of magnitude as the number of genes located in that chromosome by breeding experiments. He inclines to regard these bands as indicating the actual genes (or separations of genes). Dividing the length of the chromosome, measured in a normal-sized cell, by their number (2,000), he finds the volume of a gene equal to a cube of edge 300 Å. Considering the roughness of the estimates, we may regard this to be also the size obtained by the first method.

Small Numbers

A full discussion of the bearing of statistical physics on all the facts I am recalling – or perhaps, I ought to say, of the bearing of these facts on the use of statistical physics in the living cell – will follow later. But let me draw attention at this point to the fact that 300 Å is only about 100 or 150 atomic distances in a liquid or in a solid, so that a gene contains certainly not more than about a million or a few million atoms. That number is much too small (from the √n point of view) to entail an orderly and lawful behaviour according to statistical physics – and that means according to physics. It is too small, even if all these atoms played the same role, as they do in a gas or in a drop of liquid. And the gene is most certainly not just a homogeneous drop of liquid. It is probably a large protein molecule, in which every atom, every radical, every heterocyclic ring plays an individual role, more or less different from that played by any of the other similar atoms, radicals, or rings. This, at any rate, is the opinion of leading geneticists such as Haldane and Darlington, and we shall soon have to refer to genetic experiments which come very near to proving it.
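The arithmetic behind ‘a million or a few million atoms’ can be checked with a short sketch. It assumes atomic distances of 3 Å and 2 Å (which give the 100 and 150 distances per edge mentioned above) and reads the √n rule as a relative statistical error of the order of 1/√n; the function names are hypothetical.

```python
import math


def atoms_in_cube(edge_angstrom: float, atomic_distance_angstrom: float) -> float:
    """Rough atom count for a cube, assuming one atom per atomic distance along each edge."""
    return (edge_angstrom / atomic_distance_angstrom) ** 3


def relative_fluctuation(n: float) -> float:
    """Order of magnitude of the relative statistical error for n atoms (the sqrt(n) rule)."""
    return 1 / math.sqrt(n)


GENE_EDGE = 300.0  # in Å, the cube edge quoted in the text

for spacing in (3.0, 2.0):  # assumed atomic distances in Å
    n = atoms_in_cube(GENE_EDGE, spacing)
    print(f"spacing {spacing} Å: about {n:.1e} atoms, "
          f"relative error of order {relative_fluctuation(n):.1e}")
```

Under these assumptions the count lies between one million and about 3.4 million atoms, for which the √n rule gives a relative error of the order of one part in a thousand or two.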

Permanence

Let us now turn to the second highly relevant question: What degree of permanence do we encounter in hereditary properties and what must we therefore attribute to the material structures which carry them?

The answer to this can really be given without any special investigation. The mere fact that we speak of hereditary properties indicates that we recognize the permanence to be almost absolute. For we must not forget that what is passed on by the parent to the child is not just this or that peculiarity, a hooked nose, short fingers, a tendency to rheumatism, haemophilia, dichromasy, etc. Such features we may conveniently select for studying the laws of heredity. But actually it is the whole (four-dimensional) pattern of the ‘phenotype’, the visible and manifest nature of the individual, which is reproduced without appreciable change for generations, permanent within centuries – though not within tens of thousands of years – and borne at each transmission by the material structure of the nuclei of the two cells which unite to form the fertilized egg cell. That is a marvel – than which only one is greater; one that, if intimately connected with it, yet lies on a different plane. I mean the fact that we, whose total being is entirely based on a marvellous interplay of this very kind, yet possess the power of acquiring considerable knowledge about it. I think it possible that this knowledge may advance to little short of a complete understanding – of the first marvel. The second may well be beyond human understanding.

Chapter 3: Mutations

‘Jump-Like’ Mutations – The Working-Ground of Natural Selection

The general facts which we have just put forward in evidence of the durability claimed for the gene structure, are perhaps too familiar to us to be striking or to be regarded as convincing. Here, for once, the common saying that exceptions prove the rule is actually true. If there were no exceptions to the likeness between children and parents, we should have been deprived not only of all those beautiful experiments which have revealed to us the detailed mechanism of heredity, but also of that grand, million-fold experiment of Nature, which forges the species by natural selection and survival of the fittest.

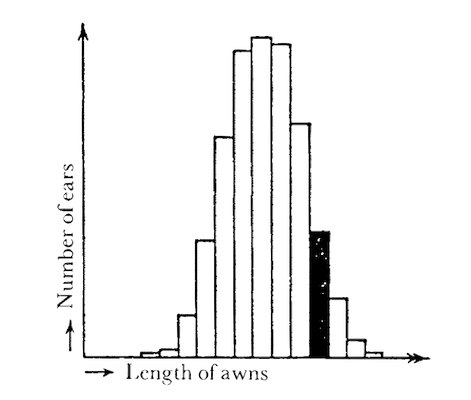

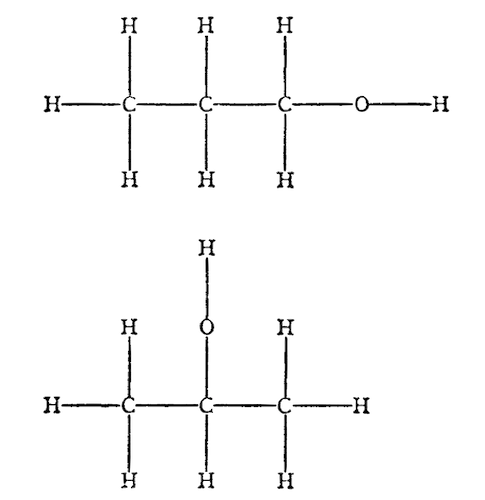

Let me take this last important subject as the starting-point for presenting the relevant facts – again with an apology and a reminder that I am not a biologist: We know definitely, today, that Darwin was mistaken in regarding the small, continuous, accidental variations, that are bound to occur even in the most homogeneous population, as the material on which natural selection works. For it has been proved that they are not inherited. The fact is important enough to be illustrated briefly. If you take a crop of pure-strain barley, and measure, ear by ear, the length of its awns and plot the result of your statistics, you will get a bell-shaped curve as shown in Fig. 7, where the number of ears with a definite length of awn is plotted against the length. In other words: a definite medium length prevails, and deviations in either direction occur with certain frequencies. Now pick out a group of ears (as indicated by blackening) with awns noticeably beyond the average, but sufficient in number to be sown in a field by themselves and give a new crop. In making the same statistics for this, Darwin would have expected to find the corresponding curve shifted to the right. In other words, he would have expected to produce by selection an increase of the average length of the awns. That is not the case, if a truly pure-bred strain of barley has been used. The new statistical curve, obtained from the selected crop, is identical with the first one, and the same would be the case if ears with particularly short awns had been selected for seed.

Figure 7: Statistics of length of awns in a pure-bred crop. The black group is to be selected for sowing. (The details are not from an actual experiment, but are just set up for illustration.)

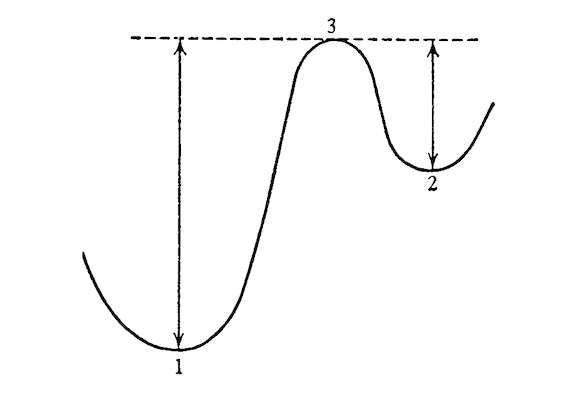

Selection has no effect – because the small, continuous variations are not inherited. They are obviously not based on the structure of the hereditary substance, they are accidental. But about forty years ago the Dutchman de Vries discovered that in the offspring even of thoroughly pure-bred stocks, a very small number of individuals, say two or three in tens of thousands, turn up with small but ‘jump-like’ changes, the expression ‘jump-like’ not meaning that the change is so very considerable, but that there is a discontinuity inasmuch as there are no intermediate forms between the unchanged and the few changed. De Vries called that a mutation. The significant fact is the discontinuity. It reminds a physicist of quantum theory – no intermediate energies occurring between two neighbouring energy levels. He would be inclined to call de Vries’s mutation theory, figuratively, the quantum theory of biology. We shall see later that this is much more than figurative. The mutations are actually due to quantum jumps in the gene molecule. But quantum theory was but two years old when de Vries first published his discovery, in 1902. Small wonder that it took another generation to discover the intimate connection!

They Breed True, That is, They are Perfectly Inherited

Mutations are inherited as perfectly as the original, unchanged characters were. To give an example, in the first crop of barley considered above a few ears might turn up with awns considerably outside the range of variability shown in Fig. 7, say with no awns at all. They might represent a de Vries mutation and would then breed perfectly true, that is to say, all their descendants would be equally awnless.

Hence a mutation is definitely a change in the hereditary treasure and has to be accounted for by some change in the hereditary substance. Actually most of the important breeding experiments, which have revealed to us the mechanism of heredity, consisted in a careful analysis of the offspring obtained by crossing, according to a preconceived plan, mutated (or, in many cases, multiply mutated) with non-mutated or with differently mutated individuals. On the other hand, by virtue of their breeding true, mutations are a suitable material on which natural selection may work and produce the species as described by Darwin, by eliminating the unfit and letting the fittest survive. In Darwin’s theory, you just have to substitute ‘mutations’ for his ‘slight accidental variations’ (just as quantum theory substitutes ‘quantum jump’ for ‘continuous transfer of energy’). In all other respects little change was necessary in Darwin’s theory, that is, if I am correctly interpreting the view held by the majority of biologists.

Localization, Recessivity and Dominance

We must now review some other fundamental facts and notions about mutations, again in a slightly dogmatic manner, without showing directly how they spring, one by one, from the experimental evidence.

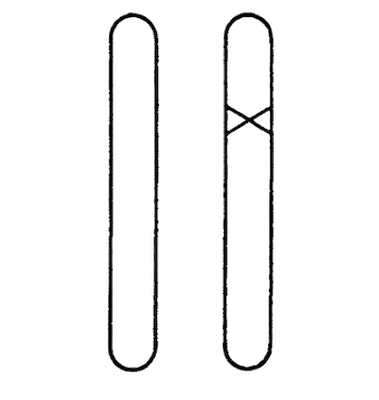

Figure 8: Heterozygous mutant. The cross marks the mutated gene.

We should expect a definite observed mutation to be caused by a change in a definite region in one of the chromosomes. And so it is. It is important to state that we know definitely, that it is a change in one chromosome only, but not in the corresponding ‘locus’ of the homologous chromosome. Fig. 8 indicates this schematically, the cross denoting the mutated locus. The fact that only one chromosome is affected is revealed when the mutated individual (often called ‘mutant’) is crossed with a non-mutated one. For exactly half of the offspring exhibit the mutant character and half the normal one. That is what is to be expected as a consequence of the separation of the two chromosomes on meiosis in the mutant as shown, very schematically, in Fig. 9. This is a ‘pedigree’, representing every individual (of three consecutive generations) simply by the pair of chromosomes in question. Please realize that if the mutant had both its chromosomes affected, all the children would receive the same (mixed) inheritance, different from that of either parent.

Figure 9: Inheritance of a mutation. The straight lines across indicate the transfer of a chromosome, the double ones that of the mutated chromosome. The unaccounted-for chromosomes of the third generation come from the mates of the second generation, which are not included in the diagram. They are supposed to be non-relatives, free of the mutation.

But experimenting in this domain is not as simple as would appear from what has just been said. It is complicated by the second important fact, viz. that mutations are very often latent. What does that mean? In the mutant the two ‘copies of the code-script’ are no longer identical; they present two different ‘readings’ or ‘versions’, at any rate in that one place. Perhaps it is well to point out at once that, while it might be tempting, it would nevertheless be entirely wrong to regard the original version as ‘orthodox’, and the mutant version as ‘heretic’. We have to regard them, in principle, as being of equal right – for the normal characters have also arisen from mutations.

What actually happens is that the ‘pattern’ of the individual, as a general rule, follows either the one or the other version, which may be the normal or the mutant one. The version which is followed is called dominant, the other, recessive; in other words, the mutation is called dominant or recessive, according to whether it is immediately effective in changing the pattern or not.

Recessive mutations are even more frequent than dominant ones and are very important, though at first they do not show up at all. To affect the pattern, they have to be present in both chromosomes (see Fig. 10). Such individuals can be produced when two equal recessive mutants happen to be crossed with each other or when a mutant is crossed with itself; this is possible in hermaphroditic plants and even happens spontaneously. An easy reflection shows that in these cases about one-quarter of the offspring will be of this type and thus visibly exhibit the mutated pattern.

Figure 10: Homozygous mutant, obtained in one-quarter of the descendants either from self-fertilization of a heterozygous mutant (see Fig. 8) or from crossing two of them.

Introducing Some Technical Language

I think it will make for clarity to explain here a few technical terms. For what I called ‘version of the code-script’ – be it the original one or a mutant one – the term ‘allele’ has been adopted. When the versions are different, as indicated in Fig. 8, the individual is called heterozygous, with respect to that locus. When they are equal, as in the non-mutated individual or in the case of Fig. 10, they are called homozygous. Thus a recessive allele influences the pattern only when homozygous, whereas a dominant allele produces the same pattern, whether homozygous or only heterozygous.

Colour is very often dominant over lack of colour (or white). Thus, for example, a pea will flower white only when it has the ‘recessive allele responsible for white’ in both chromosomes in question, when it is ‘homozygous for white’; it will then breed true, and all its descendants will be white. But one ‘red allele’ (the other being white; ‘heterozygous’) will make it flower red, and so will two red alleles (‘homozygous’). The difference of the latter two cases will only show up in the offspring, when the heterozygous red will produce some white descendants, and the homozygous red will breed true.

The fact that two individuals may be exactly alike in their outward appearance, yet differ in their inheritance, is so important that an exact differentiation is desirable. The geneticist says they have the same phenotype, but different genotype. The contents of the preceding paragraphs could thus be summarized in the brief, but highly technical statement: A recessive allele influences the phenotype only when the genotype is homozygous. We shall use these technical expressions occasionally, but shall recall their meaning to the reader where necessary.
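The distinction between genotype and phenotype, and the one-quarter rule for recessive homozygotes, can be made concrete with a small sketch. It treats a single locus of the pea with a dominant ‘red’ allele written `R` and a recessive ‘white’ allele written `w`; the letters and the function names are hypothetical conventions chosen for illustration.

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def cross(parent_a: str, parent_b: str) -> dict[str, Fraction]:
    """Genotype frequencies among the offspring of two parents at one locus.

    Each parent is written as two allele letters, e.g. "Rw", and passes on
    either of its two alleles with equal chance.
    """
    counts = Counter("".join(sorted(pair)) for pair in product(parent_a, parent_b))
    total = sum(counts.values())
    return {genotype: Fraction(n, total) for genotype, n in counts.items()}


def phenotype(genotype: str, dominant: str = "R") -> str:
    """Red if at least one dominant allele is present, white otherwise."""
    return "red" if dominant in genotype else "white"


for genotype, share in cross("Rw", "Rw").items():
    print(genotype, share, phenotype(genotype))
```

Crossing two heterozygous reds gives one-quarter `RR`, one-half `Rw` and one-quarter `ww`: three-quarters of the offspring share the red phenotype although they differ in genotype, and only the homozygous `ww` quarter flowers white.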

The Harmful Effect of Close-Breeding

Recessive mutations, as long as they are only heterozygous, are of course no working-ground for natural selection. If they are detrimental, as mutations very often are, they will nevertheless not be eliminated, because they are latent. Hence quite a host of unfavourable mutations may accumulate and do no immediate damage. But they are, of course, transmitted to half of the offspring, and that has an important application to man, cattle, poultry or any other species, the good physical qualities of which are of immediate concern to us. In Fig. 9 it is assumed that a male individual (say, for concreteness, myself) carries such a recessive detrimental mutation heterozygously, so that it does not show up. Assume that my wife is free of it. Then half of our children (second line) will also carry it – again heterozygously. If all of them are again mated with non-mutated partners (omitted from the diagram, to avoid confusion), a quarter of our grandchildren, on the average, will be affected in the same way.

No danger of the evil ever becoming manifest arises, unless equally affected individuals are crossed with each other, when, as an easy reflection shows, one-quarter of their children, being homozygous, would manifest the damage. Next to self-fertilization (only possible in hermaphrodite plants) the greatest danger would be a marriage between a son and a daughter of mine. Each of them standing an even chance of being latently affected or not, one-quarter of these incestuous unions would be dangerous inasmuch as one-quarter of its children would manifest the damage. The danger factor for an incestuously bred child is thus 1:16.

In the same way the danger factor works out to be 1:64 for the offspring of a union between two (‘clean-bred’) grand-children of mine who are first cousins. These do not seem to be overwhelming odds, and actually the second case is usually tolerated. But do not forget that we have analysed the consequences of only one possible latent injury in one partner of the ancestral couple (‘me and my wife’). Actually both of them are quite likely to harbour more than one latent deficiency of this kind. If you know that you yourself harbour a definite one, you have to reckon with 1 out of 8 of your first cousins sharing it! Experiments with plants and animals seem to indicate that in addition to comparatively rare deficiencies of a serious kind, there seem to be a host of minor ones whose chances combine to deteriorate the offspring of close-breeding as a whole. Since we are no longer inclined to eliminate failures in the harsh way the Lacedemonians used to adopt in the Taygetos mountain, we have to take a particularly serious view about these things in the case of man, where natural selection of the fittest is largely retrenched, nay, turned to the contrary. The anti-selective effect of the modern mass slaughter of the healthy youth of all nations is hardly outweighed by the consideration that in more primitive conditions war may have had a positive value in letting the fittest survive.
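The three figures quoted in this section (1:16, 1:64 and 1 out of 8) follow from repeated halving. The sketch below is only an illustration under the same simplifying assumptions as the text: a single heterozygous ancestor, every partner marrying into the line free of the mutation, and the mutation otherwise rare. The function and variable names are hypothetical.

```python
from fractions import Fraction

HALF = Fraction(1, 2)      # chance that a carrier passes the allele to a given child
QUARTER = Fraction(1, 4)   # chance that two carriers produce a homozygous child


def carrier_chance(generations_below_ancestor):
    """Chance that a descendant carries the allele of one heterozygous ancestor,
    assuming every partner who marries into the line is free of it."""
    return HALF ** generations_below_ancestor


def danger_factor(generations_below_ancestor):
    """Chance that a child of two equally distant descendants is homozygous."""
    both_carriers = carrier_chance(generations_below_ancestor) ** 2
    return both_carriers * QUARTER


siblings = danger_factor(1)        # union between a son and a daughter
first_cousins = danger_factor(2)   # union between two grandchildren

# A known carrier and a first cousin: the allele came from the related parent
# (1/2), that parent's sibling also received it (1/2), and passed it on (1/2).
cousin_shares_it = HALF * HALF * HALF

print(siblings, first_cousins, cousin_shares_it)
```

The cousin calculation treats the two grandchildren as children of different sons or daughters of the ancestral couple, so that their chances of being carriers are independent.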

General and Historical Remarks

The fact that the recessive allele, when heterozygous, is completely overpowered by the dominant and produces no visible effects at all, is amazing. It ought at least to be mentioned that there are exceptions to this behaviour. When a homozygous white snapdragon is crossed with an equally homozygous crimson snapdragon, all the immediate descendants are intermediate in colour, i.e. they are pink (not crimson, as might be expected). A much more important case of two alleles exhibiting their influence simultaneously occurs in blood-groups – but we cannot enter into that here. I should not be astonished if at long last recessivity should turn out to be capable of degrees and to depend on the sensitivity of the tests we apply to examine the ‘phenotype’.

This is perhaps the place for a word on the early history of genetics. The backbone of the theory, the law of inheritance, to successive generations, of properties in which the parents differ, and more especially the important distinction recessive-dominant, are due to the now world-famous Augustinian Abbot Gregor Mendel (1822-84). Mendel knew nothing about mutations and chromosomes. In his cloister gardens in Brunn (Brno) he made experiments on the garden pea, of which he reared different varieties, crossing them and watching their offspring in the 1st, 2nd, 3rd, …, generation. You might say, he experimented with mutants which he found ready-made in nature. The results he published as early as 1866 in the Proceedings of the Naturforschender Verein in Brunn. Nobody seems to have been particularly interested in the abbot’s hobby, and nobody, certainly, had the faintest idea that his discovery would in the twentieth century become the lodestar of an entirely new branch of science, easily the most interesting of our days. His paper was forgotten and was only rediscovered in 1900, simultaneously and independently, by Correns (Berlin), de Vries (Amsterdam) and Tschermak (Vienna).

The Necessity of Mutation Being a Rare Event

So far we have tended to fix our attention on harmful mutations, which may be the more numerous; but it must be definitely stated that we do encounter advantageous mutations as well. If a spontaneous mutation is a small step in the development of the species, we get the impression that some change is ‘tried out’ in rather a haphazard fashion at the risk of its being injurious, in which case it is automatically eliminated. This brings out one very important point. In order to be suitable material for the work of natural selection, mutations must be rare events, as they actually are. If they were so frequent that there was a considerable chance of, say, a dozen different mutations occurring in the same individual, the injurious ones would, as a rule, predominate over the advantageous ones and the species, instead of being improved by selection, would remain unimproved, or would perish. The comparative conservatism which results from the high degree of permanence of the genes is essential. An analogy might be sought in the working of a large manufacturing plant in a factory. For developing better methods, innovations, even if as yet unproved, must be tried out. But in order to ascertain whether the innovations improve or decrease the output, it is essential that they should be introduced one at a time, while all the other parts of the mechanism are kept constant.

Mutations Induced by X-Rays

We now have to review a most ingenious series of genetical research work, which will prove to be the most relevant feature of our analysis. The percentage of mutations in the offspring, the so-called mutation rate, can be increased to a high multiple of the small natural mutation rate by irradiating the parents with X-rays or γ-rays. The mutations produced in this way differ in no way (except by being more numerous) from those occurring spontaneously, and one has the impression that every ‘natural’ mutation can also be induced by X-rays. In Drosophila many special mutations recur spontaneously again and again in the vast cultures; they have been located in the chromosome, as described on pp. 26-9, and have been given special names. There have been found even what are called ‘multiple alleles’, that is to say, two or more different ‘versions’ and ‘readings’ – in addition to the normal, non-mutated one – of the same place in the chromosome code; that means not only two, but three or more alternatives in that particular one ‘locus’, any two of which are to each other in the relation ‘dominant-recessive’ when they occur simultaneously in their corresponding loci of the two homologous chromosomes.

The experiments on X-ray-produced mutations give the impression that every particular ‘transition’, say from the normal individual to a particular mutant, or conversely, has its individual ‘X-ray coefficient’, indicating the percentage of the offspring which turns out to have mutated in that particular way, when a unit dosage of X-ray has been applied to the parents, before the offspring was engendered.

First Law. Mutation is a Single Event

Furthermore, the laws governing the induced mutation rate are extremely simple and extremely illuminating. I follow here the report of N. W. Timofeeff, in Biological Reviews, vol. IX, 1934. To a considerable extent it refers to that author’s own beautiful work. The first law is

(1) The increase is exactly proportional to the dosage of rays, so that one can actually speak (as I did) of a coefficient of increase.

We are so used to simple proportionality that we are liable to underrate the far-reaching consequences of this simple law. To grasp them, we may remember that the price of a commodity, for example, is not always proportional to its amount. In ordinary times a shopkeeper may be so much impressed by your having bought six oranges from him, that, on your deciding to take after all a whole dozen, he may give it to you for less than double the price of the six. In times of scarcity the opposite may happen. In the present case, we conclude that the first half-dosage of radiation, while causing, say, one out of a thousand descendants to mutate, has not influenced the rest at all, either in the way of predisposing them for, or of immunizing them against, mutation. For otherwise the second half-dosage would not cause again just one out of a thousand to mutate. Mutation is thus not an accumulated effect, brought about by consecutive small portions of radiation reinforcing each other. It must consist in some single event occurring in one chromosome during irradiation. What kind of event?
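The step from proportionality to a single event can be made concrete with a small numerical comparison. The sketch below goes beyond the text in one respect, which is an assumption: ionizations in the sensitive region are taken to follow a Poisson law whose mean is proportional to the dosage. The coefficient is chosen so that a half-dosage gives roughly the one-in-a-thousand chance used above; all names are hypothetical.

```python
import math


def single_hit_rate(dose, coefficient):
    """Chance of at least one hit, for Poisson hits with mean coefficient * dose."""
    return -math.expm1(-coefficient * dose)


def two_hit_rate(dose, coefficient):
    """Chance of at least two hits under the same Poisson assumption."""
    mean_hits = coefficient * dose
    return 1.0 - math.exp(-mean_hits) * (1.0 + mean_hits)


coefficient = 0.002            # hypothetical: mean number of hits per unit dosage
half_dose, full_dose = 0.5, 1.0

# How much does the mutation rate grow when the dosage is doubled?
single_ratio = single_hit_rate(full_dose, coefficient) / single_hit_rate(half_dose, coefficient)
two_ratio = two_hit_rate(full_dose, coefficient) / two_hit_rate(half_dose, coefficient)

print(f"single event: rate grows by a factor of {single_ratio:.3f}")
print(f"two events needed: rate grows by a factor of {two_ratio:.3f}")
```

If one event suffices, doubling the dosage very nearly doubles the rate, as the first law demands. If two events had to reinforce each other, doubling the dosage would roughly quadruple the rate, which is the kind of accumulated effect the observed proportionality rules out.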

Second Law. Localization of the Event

This is answered by the second law, viz.

(2) If you vary the quality of the rays (wave-length) within wide limits, from soft X-rays to fairly hard γ-rays, the coefficient remains constant, provided you give the same dosage in so-called r-units, that is to say, provided you measure the dosage by the total amount of ions produced per unit volume in a suitably chosen standard substance during the time and at the place where the parents are exposed to the rays.

As standard substance one chooses air not only for convenience, but also for the reason that organic tissues are composed of elements of the same atomic weight as air. A lower limit for the amount of ionizations or allied processes (excitations) in the tissue is obtained simply by multiplying the number of ionizations in air by the ratio of the densities. It is thus fairly obvious, and is confirmed by a more critical investigation, that the single event, causing a mutation, is just an ionization (or similar process) occurring within some ‘critical’ volume of the germ cell. What is the size of this critical volume? It can be estimated from the observed mutation rate by a consideration of this kind: if a dosage of 50,000 ions per $\mathrm{cm}^3$ produces a chance of only 1:1000 for any particular gamete (that finds itself in the irradiated district) to mutate in that particular way, we conclude that the critical volume, the ‘target’ which has to be ‘hit’ by an ionization for that mutation to occur, is only 1/1000 of 1/50000 of a $\mathrm{cm}^3$, that is to say, one fifty-millionth of a $\mathrm{cm}^3$. The numbers are not the right ones, but are used only by way of illustration. In the actual estimate we follow M. Delbruck, in a paper by Delbruck, N. W. Timofeeff and K. G. Zimmer, which will also be the principal source of the theory to be expounded in the following two chapters. He arrives there at a size of only about ten average atomic distances cubed, containing thus only about $10^3$, that is, a thousand atoms. The simplest interpretation of this result is that there is a fair chance of producing that mutation when an ionization (or excitation) occurs not more than about ‘10 atoms away’ from some particular spot in the chromosome. We shall discuss this in more detail presently.
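The illustrative estimate of the critical volume is a single division, reproduced here as a short sketch. It uses the text's deliberately fictitious numbers and assumes that the chance of a hit equals the ion density times the target volume, which is reasonable only when that chance is small. The variable names are hypothetical.

```python
from fractions import Fraction

ions_per_cm3 = 50_000                  # illustrative dosage from the text
mutation_chance = Fraction(1, 1000)    # illustrative chance per gamete

# chance = (ions per cm^3) * (target volume in cm^3), solved for the volume
critical_volume_cm3 = mutation_chance / ions_per_cm3

# The estimate quoted from Delbruck: a cube about ten atomic distances on a side
atoms_per_edge = 10
atoms_in_target = atoms_per_edge ** 3

print(critical_volume_cm3)   # fraction of a cubic centimetre
print(atoms_in_target)       # atoms in the target cube
```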

The Timofeeff report contains a practical hint which I cannot refrain from mentioning here, though it has, of course, no bearing on our present investigation. There are plenty of occasions in modern life when a human being has to be exposed to X-rays. The direct dangers involved, as burns, X-ray cancer, sterilization, are well known, and protection by lead screens, lead-loaded aprons, etc., is provided, especially for nurses and doctors who have to handle the rays regularly. The point is, that even when these imminent dangers to the individual are successfully warded off, there appears to be the indirect danger of small detrimental mutations being produced in the germ cells – mutations of the kind envisaged when we spoke of the unfavourable results of close-breeding. To put it drastically, though perhaps a little naively, the injuriousness of a marriage between first cousins might very well be increased by the fact that their grandmother had served for a long period as an X-ray nurse. It is not a point that need worry any individual personally. But any possibility of gradually infecting the human race with unwanted latent mutations ought to be a matter of concern to the community.

Chapter 4: The Quantum-Mechanical Evidence

Permanence Unexplainable by Classical Physics

Thus, aided by the marvellously subtle instrument of X-rays (which, as the physicist remembers, revealed thirty years ago really the detailed atomic lattice structures of crystals), the united efforts of biologists and physicists have of late succeeded in reducing the upper limit for the size of the microscopic structure, being responsible for a definite large-scale feature of the individual – the ‘size of a gene’ – and reducing it far below the estimates obtained on pp. 29-30. We are now seriously faced with the question: How can we, from the point of view of statistical physics, reconcile the facts that the gene structure seems to involve only a comparatively small number of atoms (of the order of 1,000 and possibly much less), and that nevertheless it displays a most regular and lawful activity – with a durability or permanence that borders upon the miraculous?